My goal for this blog is to discuss brand-new issues arising from the latest AI releases, offering some background from my desk research along with my own perspective. I’ve been meaning to write a post on “Safety,” and yesterday Ezra Klein provided the “real time” opening for this topic.

In his piece “The Imminent Danger of A.I. Is One We’re Not Talking About,” Klein refers to what I’ll call the “Sydney Affair,” which I’ve written about previously: the Kevin Roose interview and its fallout. Klein then calls attention to a broader problem: the imminent danger of AI as another tool in the hands of capitalism, working for the benefit of capitalists and to the detriment of society at large.

While Klein uses the term “danger” appropriately, I’ll use his story to introduce the top two terms of art that I’m seeing in my AI research: Safety and Alignment.

Safety generally refers to addressing the danger of human-level AI, or artificial general intelligence (AGI). The classic example is HAL: the AI “taking over.”

Alignment generally refers to the more immediate concerns about AI, such as bias, discrimination, transparency, and fairness.

OpenAI is the company behind ChatGPT, the technology that Microsoft is incorporating into its Bing search engine, which led to the Sydney Affair. Roose’s interview and story came right after Valentine’s Day (February 14).

On February 16, OpenAI posted a new paper entitled “How should AI systems behave, and who should decide?” (openai.com). The paper is primarily a discussion of Alignment issues, such as those raised by recent releases of ChatGPT.

On February 24, OpenAI posted another new paper, entitled “Planning for AGI and beyond” (openai.com). This paper gets into the Safety concerns for a future in which AI reaches AGI status, which OpenAI defines as “highly autonomous systems that outperform humans at most economically valuable work.”

The good news is that these papers are reasonably responsive to Ezra Klein’s concerns, and OpenAI is the leading producer of the most powerful large language models (LLMs). Furthermore, OpenAI is governed by a non-profit, which speaks to Klein’s fear of AI in the hands of capitalism.

The bad news is that Microsoft, Google, and other leaders in the development of powerful AI are not non-profits. So the policies that OpenAI CEO Sam Altman espouses for OpenAI, along with his aspiration to engage with governments and regulators, will need to be taken up by those governments and regulators and formulated to apply to for-profit enterprises.

The questions around Safety and Alignment are not new, but the events of February 2023 have brought them to the surface with new, specific examples of AI behaving badly, giving new life to old fears. It is entirely rational to weigh objective evidence of the sort we are now collecting (and that I’m writing about), to treat it as support for the view that our past fears had merit, and to let current events drive urgent action in response.

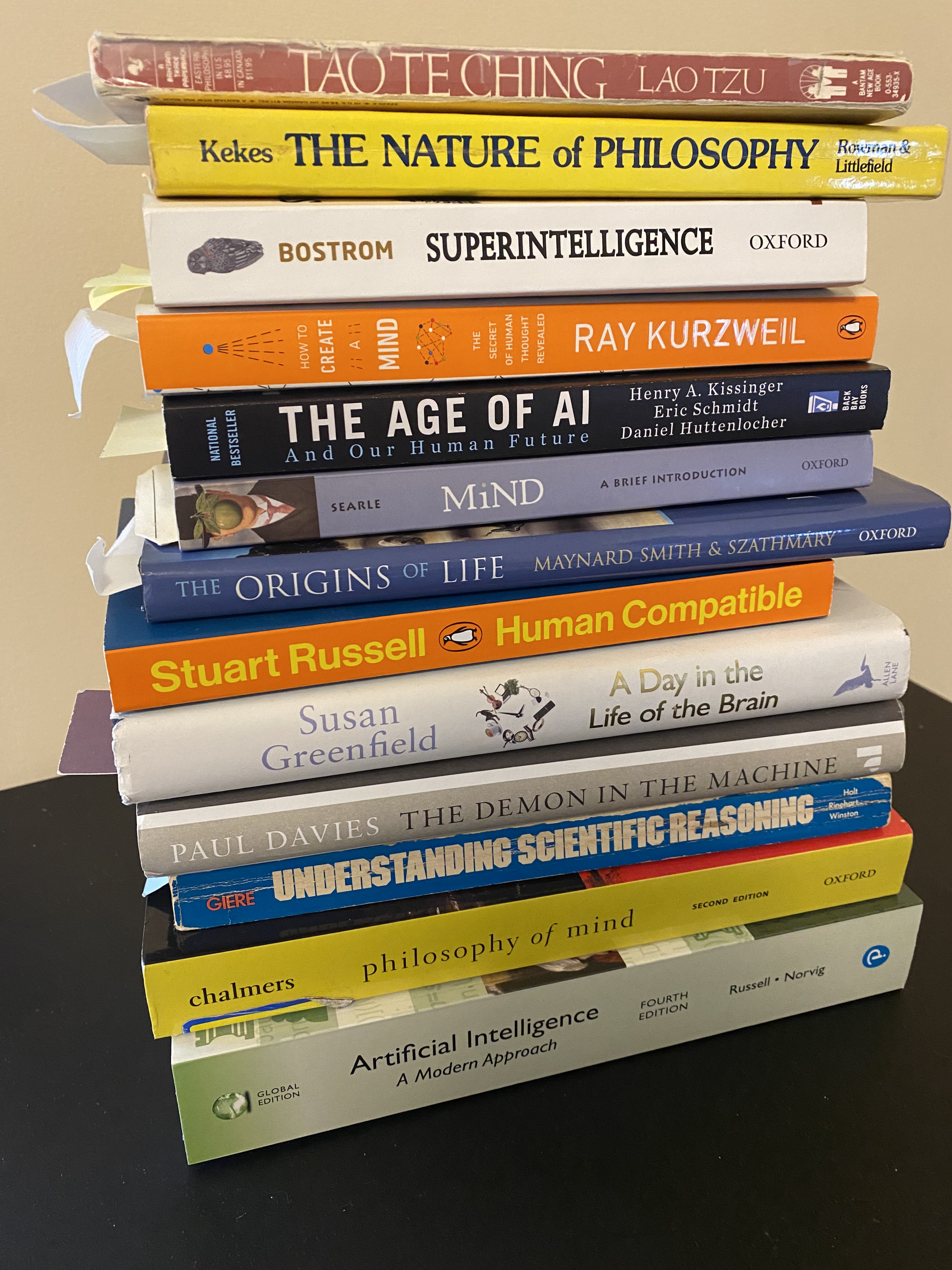

Numerous books have been written on these topics. “Superintelligence,” by Nick Bostrom, published in 2014, is primarily focused on the Safety problem. “The Age of AI,” published in 2021 by Henry Kissinger, Eric Schmidt, and Daniel Huttenlocher, includes a discussion of the AI arms race in comparison with the Cold War nuclear arms race, along with lessons from our experience of keeping the nuclear threat at bay (so far).

“Human Compatible: Artificial Intelligence and the Problem of Control,” published in 2019 by Stuart Russell, offers an insider’s perspective: Russell is the co-author, with Peter Norvig, of the seminal textbook “Artificial Intelligence: A Modern Approach,” first published in 1995.

My aims for today’s post are to:

1. Say something that is responsive to Klein’s opinion piece.
2. Sketch the outlines of the Safety and Alignment issues, including the important updates from OpenAI.
3. Point out that there is a large body of work that is responsive to the concerns raised by Klein’s NYT piece.
4. Connect all of the above to the recent US government responses I wrote about in earlier posts, including a case currently before the US Supreme Court and a recent proposal by Congressman Ted Lieu calling on Congress to start assessing and addressing the risks of AI.

I haven’t yet gone below the surface to show what these Alignment and Safety efforts look like in practice; I’ll look for the right current events to come back to them later. I think Klein’s article and Ted Lieu’s opinion piece share the same “call to action” to the general public and to policymakers: it’s time to get to work on this problem. I hope the sort of summary I’ve written today will help people who want to “get up to speed” on both what is happening and what resources are available to inform the debate that needs to take place.

I hope you’ll comment on what I’ve said so far, ask questions, and forward this to anyone you think might be interested. (No email required to post a comment!)
