My first post was about a children’s story I had Chat GPT write, with my instructions, for my grandson. I wrote about my mixed feelings about my role in “creating” the story. In the following days, I read about a controversy over someone who had written and published children’s stories using Chat GPT. The central point of contention was authorship: in the eyes of his critics, the man who signed his name as author of the story was not a legitimate author.

On the other hand, I’ve seen numerous news stories and opinion pieces by journalists and others in which they say, either right up front, or as a twist at the end, that they used Chat GPT to write the story published under their name. And with that full disclosure, it seemed entirely appropriate.

So what does “authorship” mean, in a world in which a bot is capable of writing a substantial story or essay? This is clearly a problem that has already caused disruption, and will certainly continue to do so as the technology gains more widespread use, and as the quality of its output continues to improve.

After the news about Bard and Bing, I noted that Chat GPT is good at fiction, because that type of writing is less prone to the sort of factual errors that Chat GPT, Bing, and Bard are making in their current form. I wondered how it would affect fiction writers in particular. It seemed as though fiction writing was more endangered. A few days later, an answer to my question came from the world of science fiction writing.

From Kelsey Ables at the Washington Post, about the magazine Clarkesworld:

“Flooded with AI-created content, a sci-fi magazine suspends submissions”

Washington Post

“‘Submissions are currently closed. It shouldn’t be hard to guess why,’ editor Neil Clarke wrote in a tweet thread, joining the sometimes-heated discourse about the promises, perils and literary potential of AI.”

Chat GPT is good at sci-fi, and sadly, there are too many people willing to submit Chat GPT-generated stories in the hopes of making money if the stories get published. So this is a terrible blow to aspiring sci-fi writers, and it is happening right now. Clarke has been overwhelmed, and his submission process has been brought to a halt until he figures out a solution.

And the problem extends beyond sci-fi. According to Reuters, “Some have even started coaching aspiring writers to use Chat GPT as a ‘creative partner.’” Interesting. That’s what I did writing a children’s story for my grandson. But I did it as a demonstration to my family, the first time I showed them Chat GPT, not as a commercial endeavor.

If there’s money to be made from “content,” people are certain to try to take the shortcut offered by Chat GPT. While doing research on AI safety for a piece I want to write in the near future, I read a paper from OpenAI on a workshop they did in 2020. The report is entitled: Understanding the Capabilities, Limitations, and Societal Impact of Large Language Models.

In a section on the “threat landscape” was this question:

“Do we need to spend more time worrying about how models like this could be used by profit-driven actors to generate lots of low-grade spam, or should we be more worried about state-based actors using models to generate persuasive text for use in disinformation campaigns?”

In 2020, OpenAI had anticipated something similar to the sort of disruption that Clarkesworld and the sci-fi writing community are experiencing in February of 2023. But perhaps they didn’t anticipate that for some sorts of fiction, the output would not be obvious “low-grade spam.” The fact that the bot is getting better makes the problem worse.

How does it feel to be an aspiring sci-fi writer right now? Terrible, I would suppose.

But what about other aspiring writers? What about me? Chat GPT came out, and then I started a blog!

It made me think about my writing. I used Chat GPT for the children’s story, and then for a couple of references on split-brain and dissociative disorder, but I pointed those out when I did it.

It occurred to me that I am in competition with Chat GPT. If I write a story that looks like it could have been generated by Chat GPT, I have not written something valuable.

I’ve seen a lot of “Imposter Syndrome” references in LinkedIn posts related to layoffs, or starting a new job. Imposter syndrome is common for anyone who wonders if they’re good enough at what they do. For aspiring writers, Chat GPT adds fuel to the fire. Am I a good enough writer? Can I differentiate myself from that competitor?

So it seems to me that I have to be more careful about what I write. It can’t look like Chat GPT could have done it, if I want anyone beyond friends and family to read my blog.

Today I write about being self-conscious about writing. AI can’t do that. The feeling of ‘imposter syndrome’ is uniquely human. An AI can’t feel it, because it can’t feel anything. It can’t be self-conscious because it does not have a ‘self’ and it is not conscious.

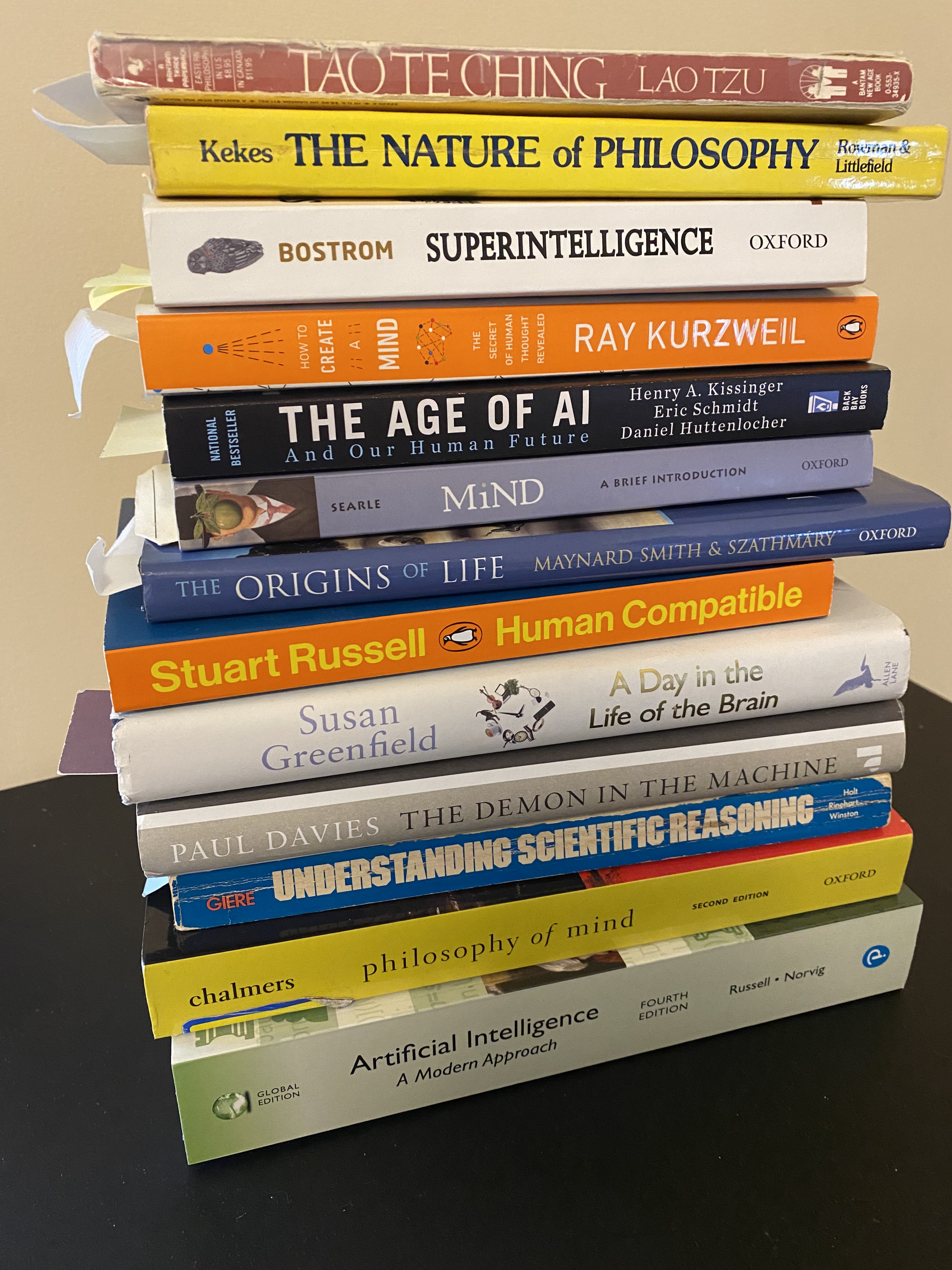

Next, I’m planning to write a piece on why AI is not sentient, and why it is not conscious.

Let me know what you think about what’s happening in the world with the rise of AI, and what topics you’re interested in. I’d like to hear from you. Leave a comment below, or send me an email: dcr@mindspring.com
