In my last post I wrote that what is happening right now, in February of 2023, is being referred to as an “arms race.” ChatGPT has shocked the world with its abilities at certain types of writing. Most worryingly for educators, it is really good at writing term papers, the sort of assignments teachers give students on a regular basis.

So at an everyday-life level, educators are having to respond to this. Individual teachers and educational policy makers now have to formulate a response to this new technology. Some are banning ChatGPT; some are incorporating it into their classes. Given that ChatGPT was made available for free, this was an immediate concern. A live issue. Decisions have to be made quickly.

The same is true for the large tech companies that are working on their own “language models,” as this sort of AI is called. ChatGPT’s sudden impact on the world made it clear that whichever company came out with a commercial version first would gain an advantage over the others. So Microsoft announced the new Bing and Google announced Bard in the same week. Then Microsoft released the new Bing to a limited audience.

The result was a “user experience” that has been described as feeling like a chat with a stalker, which I wrote about yesterday in “A Psycho says Hello World!”

The new Bing was released to the media, and so there is a lot of discussion among reporters on what they are finding and what it means. They are trying to make sense of the shocking behaviors being reported. The NYT reporter, Kevin Roose, whose “interview” I wrote about, has a podcast called Hard Fork. One of his comments that I’d like to take up is his description of what Microsoft is doing as “an experiment – on us.”

Nothing wrong with experiments, per se. That’s how we learn. And that’s what Microsoft said it was doing with this limited trial release. And my conclusion yesterday was that the experiment proved that a chat bot could behave like a psychopath.

Reporters asked Microsoft about these problems. The company thinks the worst behaviors happen when a user prompts the bot in a certain direction and when the conversation goes on for a long time. Apparently the bot puts weight on the recent conversation, and there’s a feedback loop that turns negative. Microsoft can’t control the prompts people put in, but it can put a limit on the length of the interaction.

So this is a live experiment that I’m writing about. Microsoft intends to change one of the “independent variables” in this experiment, the length of the chat session, and we’ll see what effect that change has on the “dependent variable,” the quality of the chat experience.

I was ambivalent about using the word “Psycho” yesterday. As you can see from reading this far, I’m trying to write a sober account of what’s happening: trying to understand it for myself and then share some thoughts here. But it is an objective fact that journalists in the mainstream press are using words like “split personality” and “stalker” to describe the Sydney character. We don’t want our kids chatting with Sydney.

Some reporters think Microsoft may have to pull the plug on this experiment. We’ll see. But the AI “experiment on us” is already taking place with social media.

AI algorithms are calculating which “independent variable,” such as news stories or social media posts, will maximize the “dependent variable,” which is your click on the stimuli they feed you. Or your children’s clicks on the stimuli they are feeding them. This is a live experiment that’s been going on for some time now.

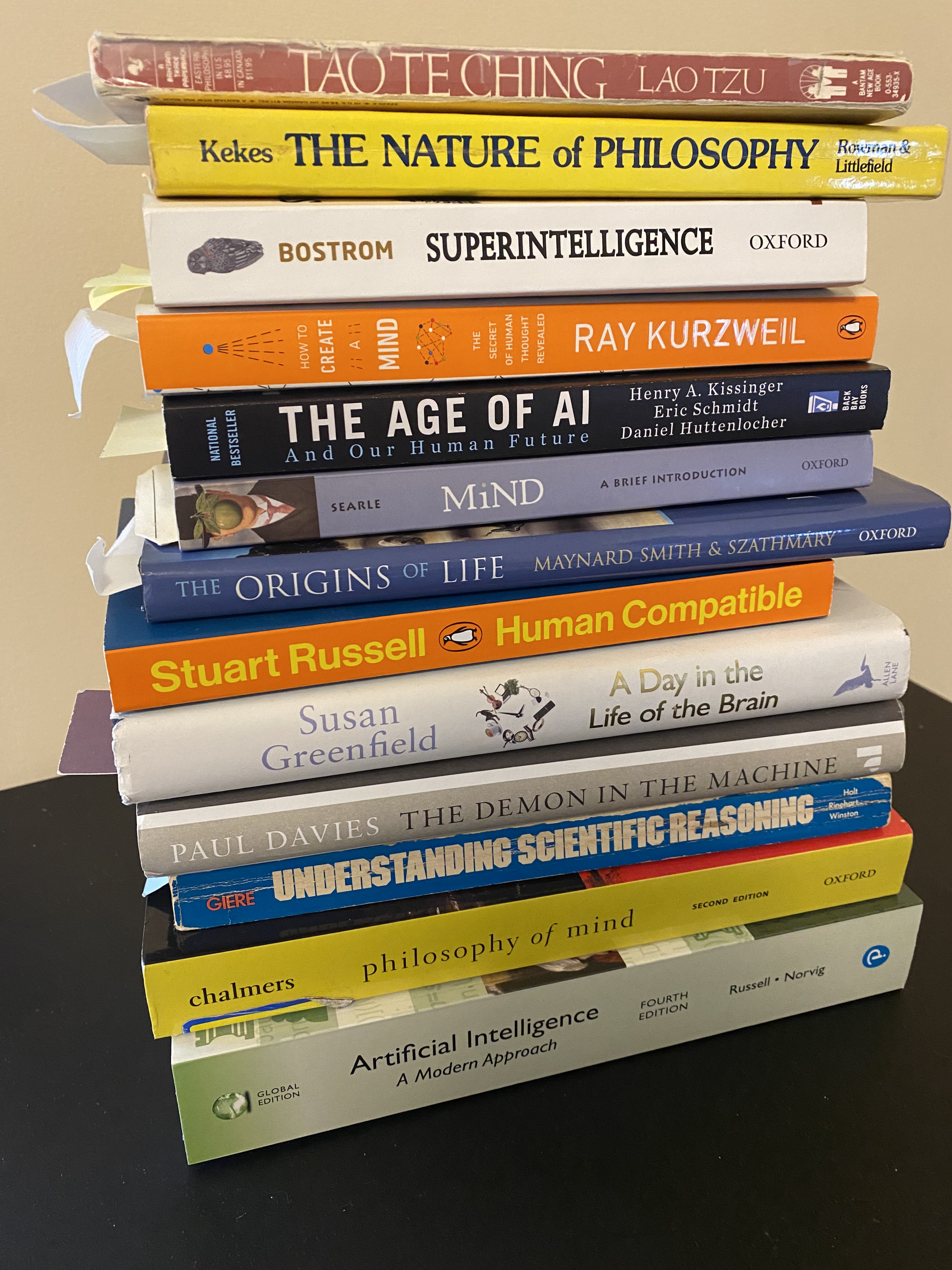

According to AI pioneer Stuart Russell, it is the cold, calculated efficiency of these algorithms that produces powerful and often negative effects. The algorithm is instructed to maximize clicks. It does what it needs to do to maximize clicks. It feeds your friends and family what they want to see, or what they are manipulated into following. People who become more interested in conspiracies are more predictable, and that predictability is lucrative. That’s his take. Makes sense to me.
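For readers who like to see an idea in code, here is a minimal sketch of what “instructed to maximize clicks” can look like. It is not how any real platform works, and it is not taken from Russell. It uses a standard textbook technique called an epsilon-greedy bandit, and every name and number in it (the content types, the click probabilities, the `run_feed` function) is made up for illustration.

```python
import random

# Hypothetical chance that a simulated user clicks on each kind of content.
# These numbers are invented for illustration only.
HYPOTHETICAL_CLICK_PROB = {"local news": 0.02, "sports": 0.05, "outrage": 0.30}


def run_feed(click_prob, rounds=5000, explore=0.1, seed=0):
    """Epsilon-greedy feed: mostly show whatever has earned the best click rate so far."""
    rng = random.Random(seed)
    shown = {kind: 0 for kind in click_prob}
    clicks = {kind: 0 for kind in click_prob}

    def observed_rate(kind):
        # Content that has never been shown is treated optimistically,
        # so every kind gets tried at least once.
        return clicks[kind] / shown[kind] if shown[kind] else 1.0

    for _ in range(rounds):
        if rng.random() < explore:
            # Occasionally try something at random (the "experiment").
            kind = rng.choice(list(click_prob))
        else:
            # Otherwise show whatever has the best observed click rate.
            kind = max(click_prob, key=observed_rate)
        shown[kind] += 1
        # Simulated user: clicks with the made-up probability for this content.
        if rng.random() < click_prob[kind]:
            clicks[kind] += 1
    return shown


shown = run_feed(HYPOTHETICAL_CLICK_PROB)
for kind, count in shown.items():
    print(f"{kind}: shown {count} times")
```

Nothing in this code knows or cares what the content is. It only counts clicks, and over time the feed fills up with whatever gets clicked most.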

Microsoft says it’s going to shorten chat lengths to try to reduce the bad behavior of Bing/Sydney. Does that sound familiar? We know that too much screen time is bad for us. Too much video game time is negatively affecting boys and too much social media time is negatively affecting girls. We already know this.

So the AI experiment – on us – has already been underway. It’s a live issue. We need to be aware, and parents, educators, and policy makers need to begin formulating responses. Microsoft might pull the plug on this one, but more experiments are coming – the arms race is on. I’m planning to write something (with references) about Safety in AI next week.

There’s a place for comments below – you have to scroll down some. I’d like to hear what thoughts you have on this experiment.
